
Direct messages on Instagram currently lack encryption, unlike Meta's other platforms, such as Messenger and WhatsApp

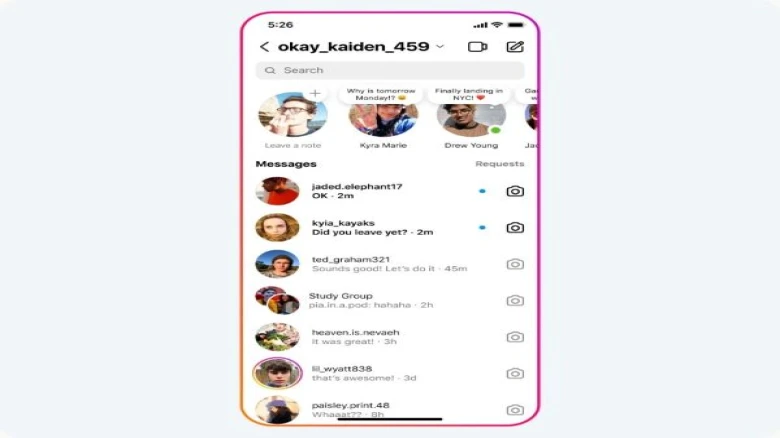

Digital Desk: Meta, Instagram's parent company, has announced plans to test new features that blur messages containing nudity, in an effort to improve teen safety on the platform. The move comes amid growing concerns over the impact of harmful content on young users' mental health.

The protection feature for Instagram's direct messages will use on-device machine learning to analyse images for nudity, with a focus on safeguarding users under 18. Meta intends to enable the feature by default for underage users and will encourage adults to activate it as well. Because the images are analysed on the device itself, the feature will also work in end-to-end encrypted chats, where Meta cannot see images unless a user reports them.

While direct messages on Instagram currently lack encryption, unlike Meta's other platforms, such as Messenger and WhatsApp, Meta plans to introduce encryption for Instagram in the future. Additionally, Meta is developing technology to identify accounts involved in potential sextortion scams and testing pop-up messages to alert users who may have interacted with such accounts.

This initiative follows Meta's previous measures to limit sensitive content exposure to teens on Facebook and Instagram, including content related to suicide, self-harm, and eating disorders.

However, Meta faces legal challenges: the attorneys general of 33 U.S. states, including California and New York, have sued the company, alleging that it repeatedly misled the public about the dangers of its platforms. In Europe, the European Commission has sought information on how Meta protects children from illegal and harmful content.
